Teams and Projects

IGFAE

The group develops advanced techniques for fast data reconstruction in high-energy physics, with a focus on the LHCb experiment at CERN. Their research explores the use of GPUs and Graph Neural Networks (GNNs) to improve the trigger system and tracking of long-lived and strange particles.

They also work on electron reconstruction in the LHCb VELO detector, DAQ integration between LHCb and the proposed Codex-b experiment, and automatic optimization of detector design.

Team members: Xabier Cid (IP), Saul López, Emilio Xosé Rodríguez, Miguel Fernández, Ángel Morcillo, María Pereira.

FITNAE

The group focuses on fast data reconstruction and flavor tagging in high-energy physics, particularly within the LHCb experiment at CERN. Their research involves the use of GPUs to accelerate the trigger system and downstream tracking for strange particles, as well as the exploration of quantum computing techniques for track reconstruction.

They also develop methods for the fast simulation of particle detectors, create event displays, and conduct offline data analysis on GPUs.

Team members: Diego Martinez Santos (IP), Veronika Chobanova, Carlos Vázquez, Claire Prouve, John Wendel, Axel Iohner, Laura Castro, Sergio Andres Estrada, Joel Sanchez, Gabriel Alejandro Fernández.

ICTEA

The group works at the intersection of high-energy physics and data science, developing intelligent algorithms for the CMS Muon Hardware Trigger and novel information-processing paradigms for the readout of hadronic calorimeters. Their research includes the implementation of machine learning (ML) techniques on FPGAs, the co-design and implementation of ML techniques in neuromorphic hardware, and the application of ML methods to data analysis within the CMS experiment at CERN.

They also explore new computing strategies to enhance the performance of the Muon Hardware Trigger and employ AI-based anomaly detection to identify rare or unexpected events in experimental data.

Team members: Pietro Vischia (IP), Bárbara Álvarez González, Javier Cuevas Maestro, Javier Fernández, Santiago Folgueras, Isidro González Caballero, Juan Rodrigo González Fernández, Enrique Palencia Cortezón.

IFCA

The group develops reconstruction algorithms and machine learning techniques for the CMS experiment at the Large Hadron Collider (LHC), focusing on high-momentum muons, displaced tracks, and systematic uncertainties in ML models.

Their work includes fast Monte Carlo production using Generative Adversarial Networks (GANs) and the design of imaging algorithms for muon tomography based on machine learning and differentiable programming. They also explore applications of quantum computing to high-energy physics, such as vertexing and tracking with the Variational Quantum Eigensolver (VQE) algorithm, as well as cloud computing and resource optimization for large-scale data processing.

Team members: Pablo Martinez Ruiz del Árbol (IP), Alicia Calderón Tazón, Jónatan Piedra Gómez, Francisco Matorras Weinig, Ibán Cabrillo Bartolomé, Rubén López Ruiz, Sergio Blanco, Pablo Matorras.

LA SALLE

The group applies artificial intelligence (AI) and machine learning (ML) techniques to enhance event reconstruction and data analysis in the LHCb experiment at CERN. Their work focuses on calorimeter reconstruction, studying the impact of radiative decays, and developing advanced tracking algorithms. They also explore the use of GPUs to significantly improve computational performance and processing speed.

Team members: Elisabet Golobardés (IP), Miriam Calvo, Núria Valls, Sergi Bernet, Xavier Vilasís.

ICC

The group focuses on high-energy physics and the development of real-time trigger systems for the LHCb experiment at CERN. Their research involves real-time reconstruction of calorimeter objects, as well as the development and calibration of reconstruction and particle identification algorithms. They also apply machine learning (ML) to improve trigger selections, data analysis, and reconstruction techniques, aiming to enhance both the efficiency and precision of real-time event processing.

Team members: Carla Marín (IP), Ricardo Vázquez, Lukas Calefice, Alessandra Gioventú, Felipe Souza, Paloma Laguarta, Pol Vidrier, Aniol Lobo, Albert Lopez, Alejandro Rodríguez, Ernest Olivart.

IFIC

The group performs research across high-energy physics, neutrino physics, nuclear and medical physics, and applied physics, with a strong emphasis on machine learning (ML), quantum computing, and advanced computing technologies. Their work includes applying deep learning techniques on GPUs and CPUs for physics analysis at the LHC, as well as developing real-time signal reconstruction algorithms on FPGAs for dense pile-up environments.

They contribute to the design of real-time trigger systems for ATLAS and LHCb, exploring innovative hardware and quantum architectures to enhance data processing and decision-making. The group also applies ML algorithms to neutrino telescope analyses, and makes use of high-performance computing (HPC), cloud computing, and networking to exploit opportunistic resources for large-scale event simulation. Their broader research includes the development of ML methods for anomaly detection, time series analysis, natural language processing, and symbolic regression.

Team members: Arantza Oyanguren (IP), Luca Fiorini (IP), Álvaro Fernández, Miriam Lucio, Jiahui Zhuo, Valerii Khloimov, Jose Mazorra, Izaac Sanderswood, Juan Antonio Valls, Aránzazu Ruiz, Alberto Valero, Fernando Carrió, Adam Bailey, Antonio Gomez, Kieran Amos, Arya Aikot, Susana Cabrera Urbán, Salvador Martí García, Francisco Salesa, Agustín Sánchez, Santiago González de la Hoz, Bryan Zaldivar, Carlos García, Carlos Escobar, Verónica Sanz, Emma Torró, Miguel G. Folgado.

ETSI

The group’s research focuses on parallel computing methods, intelligent algorithms, and machine and deep learning techniques, with applications in data science, applied physics, and high-energy physics. Their work also explores bio-inspired models of computation and sustainable systems, designing computational frameworks such as membrane systems, cellular automata, and multi-agent systems to address complex problems in biology and physics.

They develop parallel simulators to enable high-performance experimentation, and work on optimizing deep learning frameworks for sparse tensors. The group also applies deep learning models to computer vision and natural language processing, and investigates in-network computing with DPUs to achieve low-energy, efficient processing in surveillance and real-time environments.

Team members: Daniel Cagigas Muñiz (IP), Miguel Ángel Martínez del Amor, Jose Luis Guisado, Fernando Díaz Del Río, Alejandro Linares Barranco, Gabriel Jiménez Moreno, Antonio Abad Civit Balcells, Antonio Ríos Navarro, José Luis Sevillano Ramos, David Orellana Martín, Daniel Hugo Cámpora Pérez, Agustín Riscos Núñez, Miguel Ángel Gutiérrez Naranjo, José Rafael Luque Giráldez, Maria José Morón Fernández.

CIEMAT

The group focuses on high-energy physics (HEP) and data science, developing intelligent algorithms for the CMS Muon Hardware Trigger and physics object reconstruction for prompt luminosity measurements in the CMS experiment. Their work includes real-time signal reconstruction for event selection, low-latency acceleration on high-performance platforms, and the implementation of machine learning techniques on FPGAs.

They address the computing challenges of the High-Luminosity LHC (HL-LHC), studying the metrics and resource usage of HEP workloads to guide efficient system design. This involves profiling workflows and characterizing workloads in terms of core computing capabilities such as storage, memory, networking, computational performance, latency, and bandwidth.

The group also leads R&D activities within the Data Organization, Management and Access (DOMA) initiative, including the development of storage federations in Spain (between CIEMAT and PIC) and the integration of data caches to improve storage efficiency and reduce deployment costs. Additionally, they work on adapting CMS simulation workflows to fully exploit high-performance computing (HPC) resources.

Team members: Cristina Fernández Bedoya (IP), Ignacio Redondo Fernández, Álvaro Navarro Tobar, David Daniel Redondo Ferrero, José María Hernández Calama, José Flix, Antonio Pérez-Calero.

IPARCOS

This high-energy physics group focuses its research on two main goals: event reconstruction and trigger systems for Imaging Atmospheric Cherenkov Telescopes (IACTs). For event reconstruction, the group develops advanced deep learning and machine learning algorithms to improve the offline analysis of IACT data, aiming to enhance the overall performance and sensitivity of these instruments. For trigger systems, and building on their extensive experience in IACT instrumentation, they are working to deploy ML algorithms directly into the trigger hardware. Within the framework of the Cherenkov Telescope Array Observatory (CTAO), the group is designing a fully digital trigger system based on Silicon Photomultipliers (SiPMs) and Field Programmable Gate Arrays (FPGAs).

This approach seeks to improve the sensitivity and efficiency of real-time decision-making while meeting strict latency requirements, bridging the gap between offline deep learning reconstruction and on-detector intelligent triggering.

Team members: Daniel Nieto (IP), Juan Abel Barrio, Jose Luis Contreras, M. López, L.A. Tejedor, A. Cerviño, J. Buces, D. Martín, A. Pérez, M. Molina.

CAPA

The group, part of the Center for Astroparticle and High Energy Physics (CAPA), works in a highly multidisciplinary environment that bridges high-energy physics, nuclear and particle physics, astrophysics, cosmology, astroparticle physics and theoretical physics, as well as the associated technological developments. Its research combines detector technology and data analysis to address fundamental questions in physics beyond the Standard Model.

The group develops advanced signal reconstruction, event classification, and background rejection techniques for a wide range of rare-event search detectors, including scintillators and gaseous and liquid time projection chambers (TPCs). Their work encompasses pulse shape discrimination, tracking and topological analysis, and digital signal processing algorithms implemented on FPGAs. All these techniques are currently applied in rare-event experiments such as ANAIS, IAXO, and TREX-DM.

They apply machine learning methods to uncover correlations in astroparticle physics experiments such as IceCube and KM3NeT, targeting signatures of physics beyond the Standard Model. The group also develops ML and Bayesian inference approaches for density and image reconstruction in muon tomography (e.g., MLEM), and applies machine learning to global fits and observable correlation studies in flavor physics experiments like LHCb.

In addition, the group hosts the software coordination of the IAXO experiment and leads the development of the REST-for-physics framework, a modular software suite for event data processing and analysis in rare-event detection experiments.

Team members: Héctor Gómez (IP), Jorge Alda, Swadheen Bharat, José Manuel Carmona, Iván Coarasa, Theopisti Dafni, Javier Galindo, María Martínez, Alejandro Mir, Siannah Peñaranda, María Luisa Sarsa, Carmen Seoane.

Infrastructures

- ARTEMISA: ARTificial Environment for Machine Learning and Innovation in Scientific Advanced Computing.

ARTEMISA is a state-of-the-art computing infrastructure located at the Instituto de Física Corpuscular (IFIC), designed to accelerate research in machine learning (ML) and artificial intelligence (AI). It is a heterogeneous GPU-based facility that combines high-performance computing with advanced data-processing capabilities. The system currently includes 45 NVIDIA GPUs, distributed across 33 servers equipped with Volta V100 or Ampere A100 GPUs, as well as a dedicated 8-GPU server for large-scale parallel workloads. In addition, ARTEMISA features a next-generation CPU cluster and high-capacity storage system, ensuring fast, reliable data handling.

Users can access two interactive interfaces for software testing and development prior to large-scale execution in batch mode. Open to the entire Spanish research community, ARTEMISA supports researchers across disciplines in developing, training, and deploying AI and ML models. Currently, around 20 research groups from diverse scientific domains make active use of the infrastructure, driving innovation in data-intensive research.

Team members: Jose Enrique García Navarro (PI), Javier Sánchez.

- PIC: Port d’Informació Científica.

The PIC is a scientific data center located in Cerdanyola del Vallès (Barcelona, Spain), jointly managed by the Institut de Física d’Altes Energies (IFAE) and the Centro de Investigaciones Energéticas, Medioambientales y Tecnológicas (CIEMAT). PIC supports scientific collaborations that require large-scale computing resources for the storage, processing, and analysis of massive distributed datasets.

Its high-throughput infrastructure includes more than 10,000 computing cores, a tape storage system with 4,500 slots providing 41 PB of archival capacity, and 15 PB of disk storage distributed across 60 servers. Additionally, PIC hosts 19 GPUs (including 8 × NVIDIA 2080 Ti, 8 × V100, and 1 × Titan V) dedicated to artificial intelligence (AI) and machine learning (ML) research.

PIC functions as a Tier-1 data center for the Large Hadron Collider (LHC), serves as the Spanish data center for the Euclid satellite mission, and provides services to a wide range of scientific projects across disciplines. Since 2020, it has also been part of the Spanish Supercomputing Network (RES).

The AI research group at PIC works interdisciplinarily on applying AI to scientific data. Their expertise covers astronomical image processing, unsupervised image denoising, and collaborations in materials science, biological imaging, gravitational waves, neutrino physics, and quantum computing. The team also helps define the optimal hardware and software infrastructure for AI within PIC’s high-throughput environment. Through these initiatives, PIC plays a key role in advancing AI adoption in particle physics and other data-intensive research domains.

Team members: Martin Børstad Eriksen (PI), Gonzalo Merino, Francesc Torradeflot, Laura Cabayol, Hanyue Guo, Jiefeng Chan.

Projects

- HIGH-LOW: Design of high performance algorithms for low power sustainable hardware for LHC experiments and their upgrades. TED2021-130852B-I00. PIs: L. Fiorini, A. Oyanguren

- HEP generators in hybrid architectures. PIs: L. Fiorini, S. Folgueras, A. Oyanguren, A. Valero.

- Allen framework of the HLT1 at LHCb. PIs: X. Cid Vidal, C. Marín, D. Martínez Santos, A. Oyanguren, X. Vilasis.

- Design and implementation of algorithms for the barrel muon of CMS. PIs: S. Folgueras, C. Martínez.

- Development of Artificial Intelligence algorithms in FPGAs. PIs: L. Fiorini, C. Martínez.

- BUSCA: Buffer Scanner to detect LLPs at LHCb. PIs: X. Cid Vidal, C. Marín, D. Martínez Santos, A. Oyanguren, X. Vilasis.

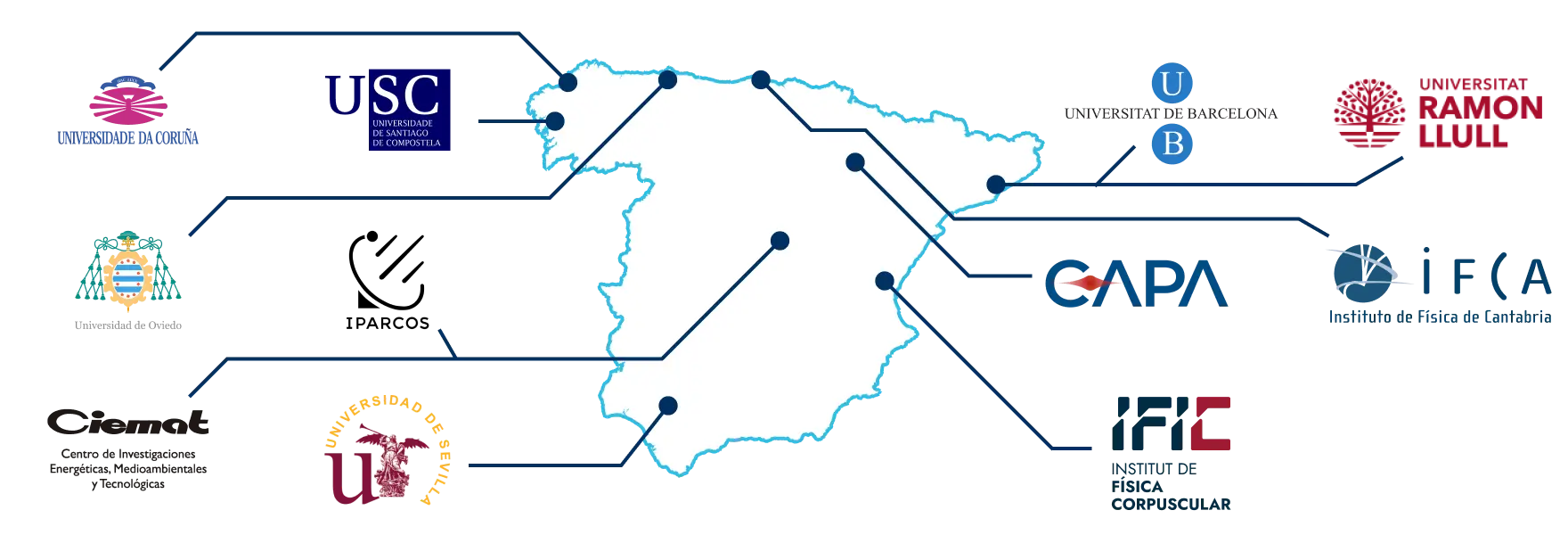

Connections

- CPAN

The Centro Nacional de Física de Partículas, Astropartículas y Nuclear (CPAN) is a strategic network supported by Spain’s Agencia Estatal de Investigación, uniting the country’s leading research groups in particle, astroparticle, and nuclear physics. CPAN fosters national and international collaboration in frontier experiments, such as those at CERN and in major cosmology and neutrino projects, while coordinating shared strategy, infrastructure, and technological development. It brings together the Spanish communities in particle, astroparticle, and nuclear physics to discuss progress, challenges, and future directions.

- IRIS-HEP

The Institute for Research and Innovation in Software for High Energy Physics (IRIS-HEP), funded by the U.S. National Science Foundation, is developing state-of-the-art software cyberinfrastructure required for the challenges of data-intensive scientific research at the High-Luminosity Large Hadron Collider (HL-LHC) at CERN and other planned HEP experiments of the 2020s.

- HSF

The HEP Software Foundation is a global, community-driven organization that fosters coordination and shared development efforts in software and computing for high-energy physics (HEP). It brings together scientists, software engineers, and institutions to address common challenges across experiments and domains. HSF organizes working groups, training programs, community events, and maintains a knowledge base to support collaborative innovation.

- AIHUB

The Artificial Intelligence HUB (AIHUB) is a network initiative of the Spanish National Research Council (CSIC) that brings together over 400 researchers from more than 80 research groups across 40 CSIC centers, spanning disciplines such as AI, physics, life sciences, microelectronics, astronomy, and philosophy. The HUB aims to foster collaboration in research, training, technology transfer, and public engagement, acting as a point of connection between CSIC centers, external institutions, industry, and society. Its mission includes consolidating a scientific network in AI towards 2030, promoting young talent, and ensuring ethical and socially aligned development of AI technologies.

- HSF-India

This is a collaborative initiative with support from the U.S. National Science Foundation to strengthen international research software partnerships between India, the United States, and Europe. The program focuses on advancing open, sustainable, and high-performance software for high-energy, nuclear, and astroparticle physics research.